Incident Generation and Runbook Execution

Introduction

This topic describes in detail the ideas introduced in Connecting a Customer Environment to Riverbed IQ Ops SaaS:

- Key measurements are modeled by Ingest & Analytics. A key measurement is a measurement or data point that is monitored and analyzed to detect anomalies and generate incidents.
- Anomalous measurements generate associated indicators. An anomaly is an unexpected event or measurement that does not match the expected model. An indicator is an observed change in a specific metric stream that is recognized as being outside of an expected model; indicators are correlated into triggers, and one or more triggers are grouped into incidents.
- Correlation groups assemble indicators into detections that form the basis of incidents. A detection is one or more correlated indicators that may act as a trigger for incident creation or runbook execution. An incident is a collection of one or more related triggers; relationships that cause triggers to be combined into incidents include application, location, operating system, or a trigger by itself.
- Incidents trigger Runbook executions. A trigger is a set of one or more indicators that have been correlated based on certain relationships, such as time, metric type, application affected, location, or network device. A Runbook is an automated workflow that executes a series of steps or tasks in response to a triggered event, such as the detection of anomalous behavior generating an incident, a lifecycle event, or a manually executed runbook.

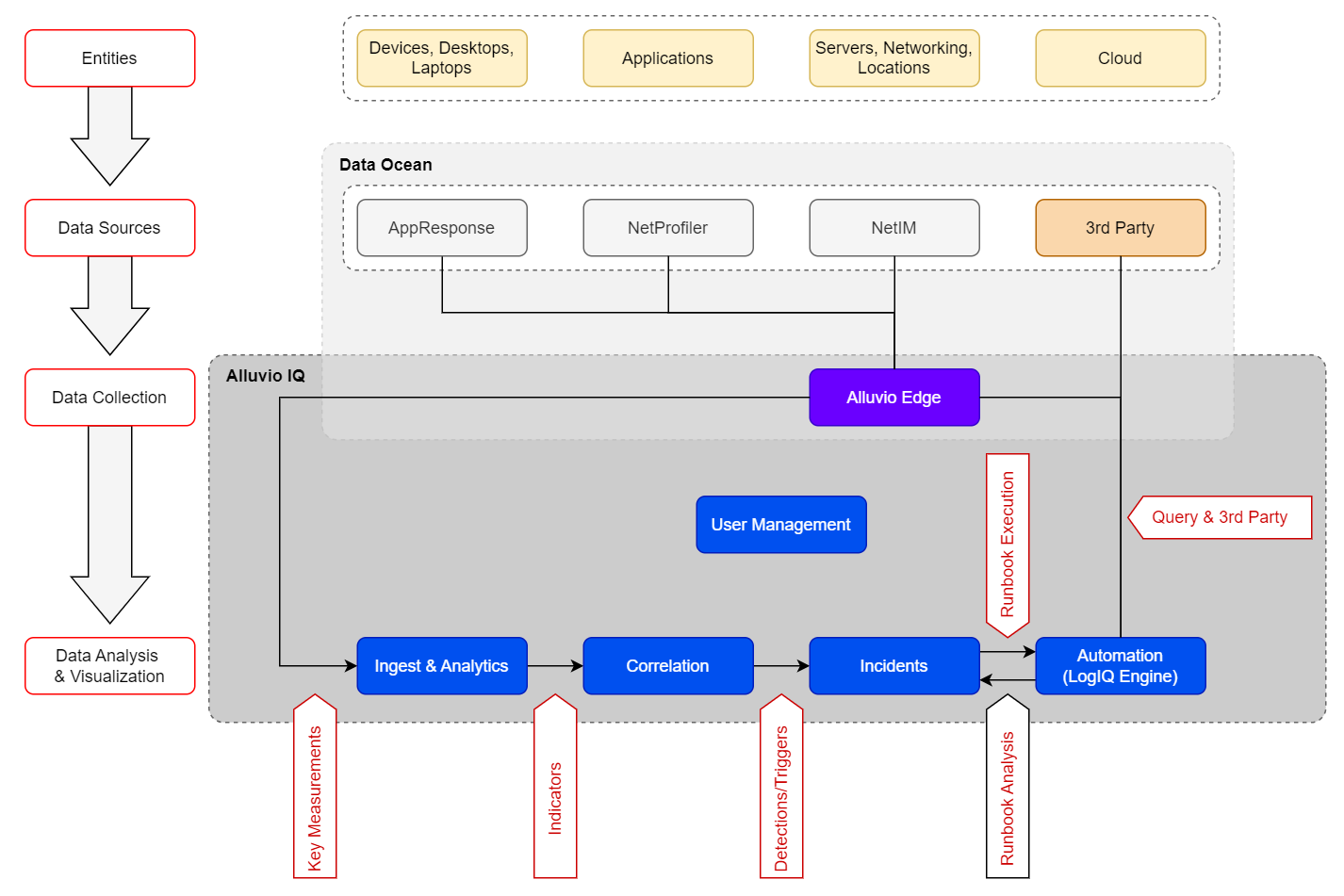

This diagram illustrates how information flows and is processed to become an incident that executes a Runbook.

Incident Generation and Runbook Execution

Data Sources: Key Measurements

The key measurements flowing into a customer’s Riverbed IQ Ops tenant depend directly on the data sources present in the customer environment and connected to that Riverbed IQ Ops tenant.

The key measurements streamed from each data source type are:

Pipeline Inputs: Key Measurements

| Data Source | Entity[s] | Metric |
|---|---|---|
| NetProfiler | Application / Client Location | User Response Time (see Table Note 1) |
| | | MoS |
| | Interface | In Utilization |
| | | Out Utilization |
| AppResponse | Application / Client Location | User Response Time |
| | | Throughput (see Table Note 2) |
| | | % Retrans Packets |
| | | % Failed Connections |
| NetIM | Device | Device Status |
| | | Device Uptime |
| | Interface | Interface Status |
| | | In Packet Error Rate |
| | | Out Packet Error Rate |
| | | In Packet Drops Rate |
| | | Out Packet Drops Rate |
| | | In Utilization |
| | | Out Utilization |
| Aternity | Application / Client Location | Activity Network Time |
| | | Activity Response Time |
| | | Page Load Network Time |
| | | % Hang Time |

Table Notes:

1 - [Metric: User Response Time] as reported by NetProfiler is an approximation of AppResponse [user-response-time], because NetProfiler does not currently account for [connection_setup_time], while AppResponse does. It is only processed for named applications (e.g., it excludes ICMP, SNMP, TCP_Unknown, and UDP_Unknown).

2 - [Metric: Throughput] is monitored only for VoIP-related applications: {VOIP, SIP, RTP}.

Ingest & Analytics: Indicators

Overview

For each key measurement flowing into a customer's Riverbed IQ Ops tenant, Ingest & Analytics either applies a simple model (e.g., a Static Threshold) or builds a more dynamic, complex model (e.g., a Time Series Baseline) of that key measurement's behavior. Any significant variance from that model is classified as an anomaly, which is why these modeling technologies are called anomaly detection algorithms. Riverbed IQ Ops generates an indicator for each anomaly detected; the indicator contains contextual information about the anomaly that can be leveraged by further processing.

Anomaly Detection Algorithms

Riverbed IQ Ops supports the following anomaly detection algorithms:

Static Threshold (ThresholdDetector)

- IQ ThresholdDetector models simple, configured thresholds. It can be configured to model "too-high" thresholds, "too-low" thresholds, or both.
  - When configured to model "too-high" thresholds, any [observed-value] that exceeds the model's [high-threshold] is classified as an anomaly.
  - When configured to model "too-low" thresholds, any [observed-value] that falls below the model's [low-threshold] is classified as an anomaly.

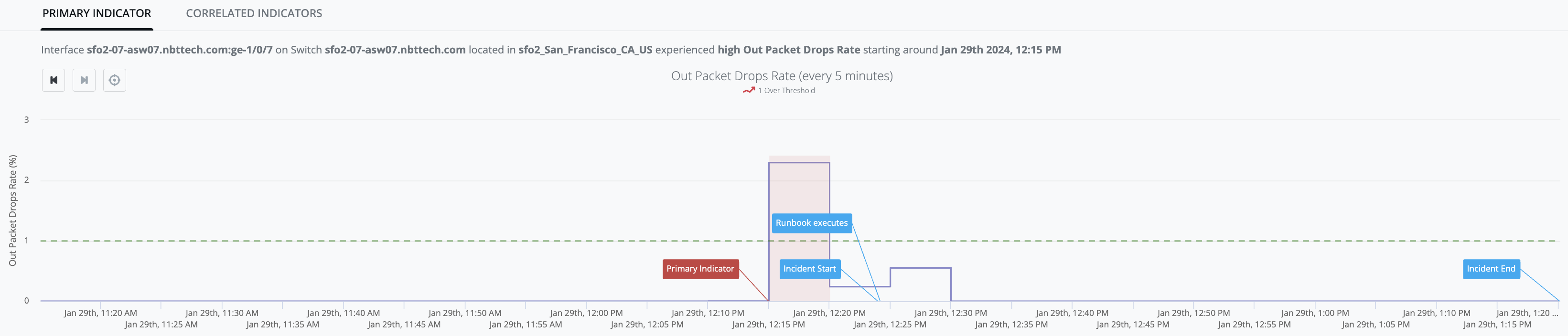

Example Graph: [out_packet_drops_rate] observed values stay below the [high-threshold] until Jan-29th, 12:15PM, when [observed-value: 2.3%] exceeds the [high-threshold: 1%] and generates an Indicator (red-zone).
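The static-threshold rule can be sketched in a few lines of Python. This is a minimal illustration, not Riverbed IQ Ops code: the class name mirrors ThresholdDetector, but the `high_threshold`/`low_threshold` fields and the `is_anomalous` method are hypothetical. The values reuse the example graph (a 1% too-high threshold and a 2.3% observation).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThresholdDetector:
    """Hypothetical static-threshold model; either bound may be disabled."""
    high_threshold: Optional[float] = None  # "too-high" check; None disables it
    low_threshold: Optional[float] = None   # "too-low" check; None disables it

    def is_anomalous(self, observed_value: float) -> bool:
        # Too-high: the observed value strictly exceeds the high threshold.
        if self.high_threshold is not None and observed_value > self.high_threshold:
            return True
        # Too-low: the observed value falls strictly below the low threshold.
        if self.low_threshold is not None and observed_value < self.low_threshold:
            return True
        return False


# Out Packet Drops Rate (%) with a 1% too-high threshold, as in the example graph.
drops = ThresholdDetector(high_threshold=1.0)
print(drops.is_anomalous(0.4))  # False: below the threshold
print(drops.is_anomalous(2.3))  # True: an indicator would be generated
```

Because either bound can be left as `None`, the same class covers "too-high" only, "too-low" only, or both.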

Time-series Baseline (BaselineDetector)

- The IQ BaselineDetector model leverages Season/Trend/Level (STL) history/data to predict an [expected-range] of values (i.e., the "green river") within which it expects the next [observed-value] to lie. The initial model is built from two days of data (i.e., it models daily seasonality) and is replaced by a final model after two weeks of data (i.e., it models weekly seasonality). The model is updated with each new [observed-value].
  - Any [observed-value] that falls above the predicted [expected-range] is classified as an anomaly.
  - Any [observed-value] that falls below the predicted [expected-range] is classified as an anomaly.

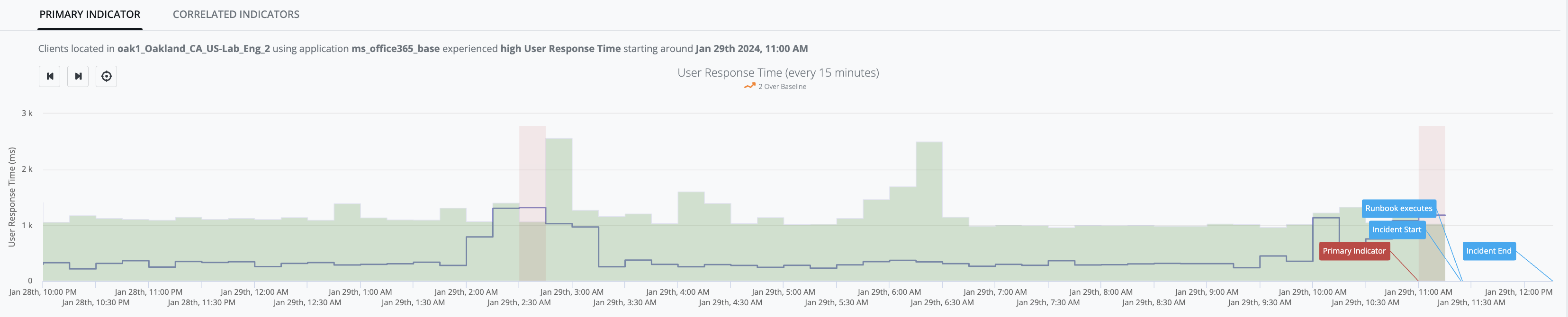

Example Graph: [user_response_time] observed values stay within the green river until Jan-29th, 11:00AM, when [observed-value: 1.18s] exceeds the [expected-range: 0ms - 1.02s] and generates an Indicator (red-zone).
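BaselineDetector is described as using Season/Trend/Level (STL) history, and its internals are not documented here. The sketch below deliberately swaps in a much simpler technique, a per-slot seasonal mean plus or minus k standard deviations, only to illustrate the "green river" idea: each new observation is compared with the range predicted from earlier observations in the same seasonal slot and is then folded into the model. The class name and the `period` and `k` parameters are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List, Optional, Tuple


class SeasonalBaseline:
    """Simplified seasonal baseline (NOT STL): mean +/- k*stdev per seasonal slot."""

    def __init__(self, period: int, k: float = 3.0):
        self.period = period  # samples per season, e.g. 288 five-minute slots per day
        self.k = k            # width of the expected range, in standard deviations
        self.history: Dict[int, List[float]] = defaultdict(list)

    def expected_range(self, t: int) -> Optional[Tuple[float, float]]:
        values = self.history[t % self.period]
        if len(values) < 2:
            return None  # not enough history for this slot yet
        mu, sigma = mean(values), pstdev(values)
        # Response times cannot be negative, so clamp the lower bound at 0.
        return max(0.0, mu - self.k * sigma), mu + self.k * sigma

    def observe(self, t: int, value: float) -> bool:
        """Return True if value falls outside the predicted range, then update the model."""
        rng = self.expected_range(t)
        anomalous = rng is not None and not (rng[0] <= value <= rng[1])
        self.history[t % self.period].append(value)
        return anomalous


# Three "days" of a 4-slot season, then a spike to 1.18 s in slot 0.
model = SeasonalBaseline(period=4)
days = [[0.50, 0.60, 0.70, 0.80], [0.52, 0.62, 0.72, 0.82], [0.51, 0.61, 0.71, 0.81]]
flags = [model.observe(t, v) for t, v in enumerate(x for day in days for x in day)]
print(any(flags))                # False
print(model.observe(12, 1.18))   # True
```

Both directions are covered: a value above or below the range is flagged, matching the two rules listed for BaselineDetector.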

Dynamic Threshold (DynamicThreshold)

- DynamicThresholdDetector models a target dataset using an exponential distribution that is "fitted" to the target dataset to profile its pattern. This profile is then used to calculate the [dynamic-threshold-value] for the dataset when a new [observed-value] arrives. The model is updated with each new [observed-value].
  - Any [observed-value] that falls above the calculated [dynamic-threshold-value] is considered anomalous.

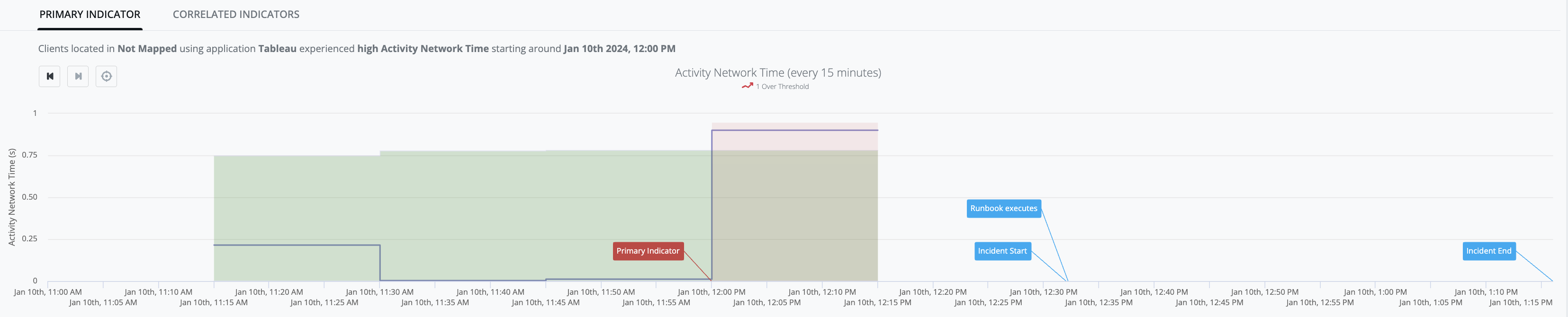

Example Graph: [activity_network_time] observed values stay within the green river until Jan-10th, 12:00PM, when [observed-value: 0.90s] exceeds the [dynamic-threshold-value: 0.78s] and generates an Indicator (red-zone).
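A minimal sketch of an exponential-distribution threshold, assuming the threshold is a high quantile (here a hypothetical 99th percentile) of an exponential distribution fitted by maximum likelihood (rate = 1/mean). The fitting procedure and quantile that DynamicThresholdDetector actually uses are not documented here; the class name, `quantile`, and `min_samples` are illustrative only.

```python
import math


class ExponentialThreshold:
    """Hypothetical dynamic threshold: high quantile of a fitted exponential distribution."""

    def __init__(self, quantile: float = 0.99, min_samples: int = 10):
        self.quantile = quantile        # e.g. 0.99 -> 99th percentile of the fitted model
        self.min_samples = min_samples  # observations needed before the model is used
        self.count = 0
        self.total = 0.0

    def threshold(self):
        if self.count < self.min_samples or self.total <= 0:
            return None
        scale = self.total / self.count  # maximum-likelihood estimate of the mean (1 / rate)
        # Inverse CDF of the exponential distribution: F^-1(q) = -scale * ln(1 - q)
        return -scale * math.log(1.0 - self.quantile)

    def observe(self, value: float) -> bool:
        """Compare value with the current threshold, then update the fit with it."""
        limit = self.threshold()
        anomalous = limit is not None and value > limit
        self.count += 1
        self.total += value
        return anomalous


detector = ExponentialThreshold()
for _ in range(20):
    detector.observe(0.15)              # illustrative activity network times, in seconds
print(round(detector.threshold(), 2))   # 0.69
print(detector.observe(0.90))           # True
```

Only a running count and sum are kept, so updating the model with each new observation is constant-time.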

Bounded Dynamic Threshold (BoundedDynamicThreshold)

- BoundedDynamicThresholdDetector models a target dataset using a statistical machine learning algorithm that is fitted to the target dataset to profile its pattern. The algorithm takes advantage of the fact that the target dataset values must be bounded between 0% and 100%. This profile is then used to calculate the [bounded-dynamic-threshold-value] for the dataset when a new [observed-value] arrives. The model is updated with each new [observed-value].
  - Any [observed-value] that increases in an unusual way and exceeds the calculated [bounded-dynamic-threshold-value] is considered anomalous and generates an IQ Ops Incident.

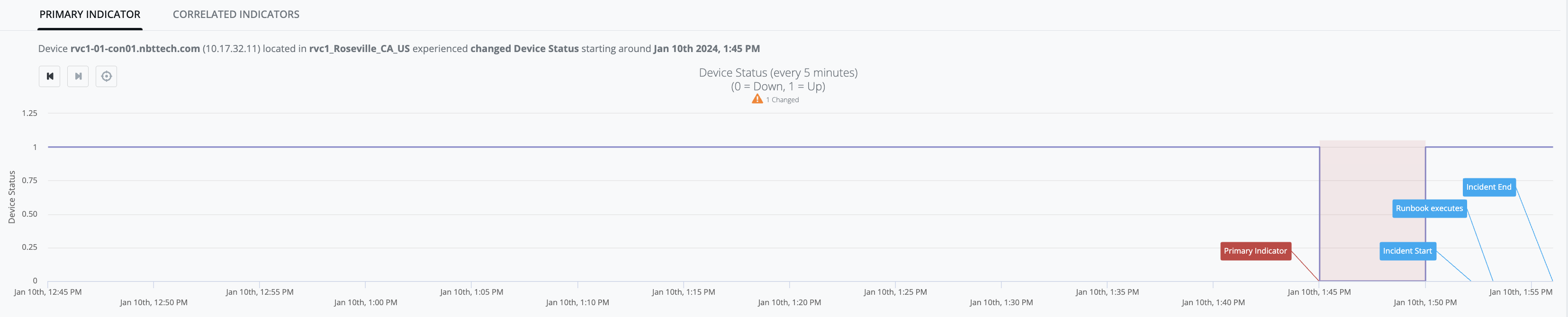
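The specific algorithm behind BoundedDynamicThresholdDetector is not named here. One simple way to respect a 0% to 100% bound, shown below as a swapped-in illustration, is to model the values in logit space (where they are unbounded), compute mean + k standard deviations there, and map the result back to a percentage. The class and function names, `k`, and `min_samples` are hypothetical.

```python
import math
from statistics import mean, stdev


def logit(p: float, eps: float = 1e-6) -> float:
    p = min(max(p, eps), 1.0 - eps)  # keep away from 0 and 1 so the log is finite
    return math.log(p / (1.0 - p))


def inv_logit(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class BoundedThreshold:
    """Hypothetical bounded threshold: mean + k*stdev computed in logit space."""

    def __init__(self, k: float = 3.0, min_samples: int = 10):
        self.k = k
        self.min_samples = min_samples
        self.history = []  # logit-transformed fractions in (0, 1)

    def threshold_percent(self):
        if len(self.history) < self.min_samples:
            return None
        upper = mean(self.history) + self.k * stdev(self.history)
        return 100.0 * inv_logit(upper)  # mapped back, so it stays within 0% to 100%

    def observe(self, percent: float) -> bool:
        limit = self.threshold_percent()
        anomalous = limit is not None and percent > limit
        self.history.append(logit(percent / 100.0))
        return anomalous


retrans = BoundedThreshold()
for pct in [1.0, 1.2, 0.8, 1.1, 0.9, 1.0, 1.3, 0.7, 1.0, 1.1]:
    retrans.observe(pct)      # % Retrans Packets history
print(retrans.observe(1.2))   # False
print(retrans.observe(8.0))   # True
```

Unlike a plain mean + k*stdev on raw percentages, the logit mapping can never produce a threshold above 100%, which is the property the bounded detector is described as exploiting.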

State Change (ChangeDetector)

- The IQ ChangeDetector model does not make any dynamic predictions. Instead, it models a configured [expected-value], and any state change in [observed-value] that does not match [expected-value] is treated as an indicator of bad behavior.
- Example Graph: the [device-status] observed value is consistently up ([observed-value: 1]) until Jan-10th, 1:45PM:
  - then [observed-value: 0] occurs (i.e., a state change from the prior [observed-value: 1]), and
  - as a result of that state change, [device-status] no longer matches [expected-value: 1] and an Indicator is generated (red-zone).

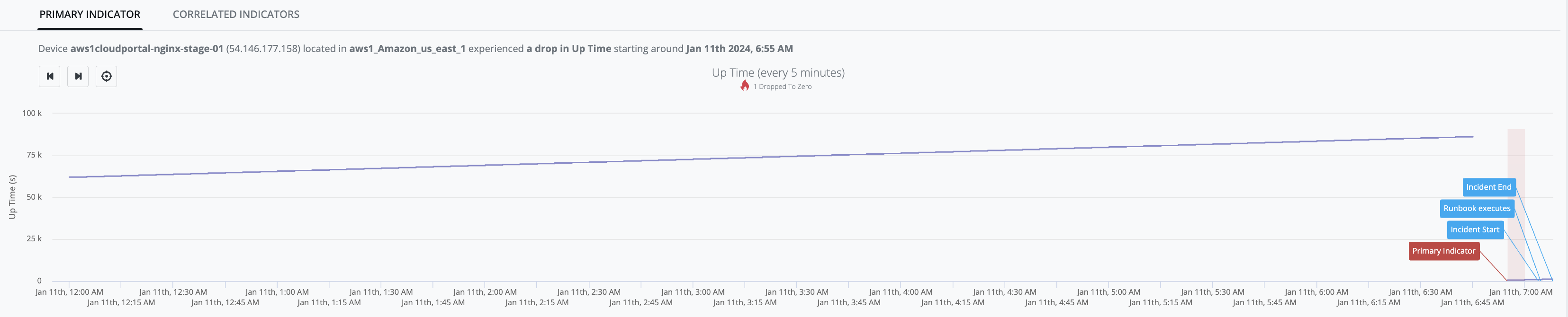
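The sketch below shows one reading of the state-change rule, assuming an indicator fires only at the moment the observed value changes to something other than the expected value, so a sustained "down" state yields a single indicator rather than one per sample. The class and method names are hypothetical.

```python
from typing import Hashable, Optional


class ChangeDetector:
    """Hypothetical state-change model with a configured expected value."""

    def __init__(self, expected_value: Hashable):
        self.expected_value = expected_value
        self.previous: Optional[Hashable] = None

    def observe(self, value: Hashable) -> bool:
        # An indicator is raised only on a state change INTO a non-expected value.
        changed = self.previous is not None and value != self.previous
        self.previous = value
        return changed and value != self.expected_value


status = ChangeDetector(expected_value=1)  # device-status: 1 = up, 0 = down
print([status.observe(v) for v in [1, 1, 1, 0, 0, 1]])
# [False, False, False, True, False, False]
```

The return to 1 is a state change too, but it matches the expected value, so no indicator is raised for the recovery.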

Continuously Increasing (AlwaysIncreasingDetector)

- AlwaysIncreasingDetector models a system in which the dataset has ever-increasing values during normal operation.
  - Any [observed-value] that is less than the [previous-value] is treated as an indicator of bad behavior.

Example Graph: [device_up_time] observed values continue to increase until Jan-11th, 6:45AM, when [observed-value: 23h 59m 16s] is recorded. No samples arrive for the next 10 minutes; when the next sample arrives ([observed-value: 9m 10s]), it is less than the last known measurement (23h 59m 16s), so an Indicator is generated (red-zone).
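A minimal sketch of the always-increasing rule, reusing the uptime values from the example. The class name mirrors AlwaysIncreasingDetector, but its interface and the `hms` helper are hypothetical. The detector keeps only the last known value, so a gap in sampling does not affect the comparison.

```python
class AlwaysIncreasingDetector:
    """Hypothetical sketch: any decrease versus the last known value is anomalous."""

    def __init__(self):
        self.previous_value = None  # last known measurement, kept across sampling gaps

    def observe(self, value: float) -> bool:
        decreased = self.previous_value is not None and value < self.previous_value
        self.previous_value = value
        return decreased


def hms(h: int, m: int, s: int) -> int:
    """Convert hours/minutes/seconds to seconds."""
    return h * 3600 + m * 60 + s


uptime = AlwaysIncreasingDetector()
samples = [hms(23, 44, 16), hms(23, 59, 16), hms(0, 9, 10)]  # a 10-minute gap precedes the last
print([uptime.observe(v) for v in samples])  # [False, False, True]
```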

Current Key Measurements and Associated Anomaly Detection Algorithms

For each key measurement flowing into a customer's Riverbed IQ Ops tenant, Ingest & Analytics has been pre-configured to apply specific anomaly detection algorithms.

The association of key measurements to anomaly detection algorithms is shown here:

Riverbed IQ Ops Analytics Pipeline - Quick Reference

| Data Source | Entity | Metric | [1] Change Detection | [2] Always Increasing | [3] Thresholds | [4] Baselines | [5] Dynamic Threshold | Bounded Dynamic Threshold |
|---|---|---|---|---|---|---|---|---|
| NetProfiler | Application / Client Location | User Response Time (Note 1) | | | | | | |
| | | MoS | | | | | | |
| | Interface | In Utilization | | | | | | |
| | | Out Utilization | | | | | | |
| AppResponse | Application / Client Location | User Response Time (Note 1) | | | | | | |
| | | Throughput (Note 2) | | | | | | |
| | | % Retrans Packets | | | | | | |
| | | % Failed Connections | | | | | | |
| NetIM | Device | Device Status | | | | | | |
| | | Device Uptime | | | | | | |
| | Interface | Interface Status | | | | | | |
| | | In Packet Error Rate | | | | | | |
| | | Out Packet Error Rate | | | | | | |
| | | In Packet Drops Rate | | | | | | |
| | | Out Packet Drops Rate | | | | | | |
| | | In Utilization | | | | | | |
| | | Out Utilization | | | | | | |
| Aternity | Application / Client Location | Activity Network Time | | | | | | |
| | | Activity Response Time | | | | | | |
| | | Page Load Network Time | | | | | | |
| | | % Hang Time | | | | | | |

Notes:

1 - [Metric: User Response Time] is calculated as follows:

- AppResponse: [user-response-time] = ([connection_setup_time] / [connection_setup_time_n]) + ([request_network_time] / [request_network_n]) + ([response_network_time] / [response_network_n]) + ([server_delay] / [server_delay_n])
- NetProfiler: [user-response-time] = ([request_network_time] / [request_network_n]) + ([response_network_time] / [response_network_n]) + ([server_delay] / [server_delay_n])

2 - [Metric: Throughput] is only monitored for VoIP-related "applications": {VOIP, SIP, RTP}.

3 - [Anomaly Detection Algorithms]: For compounded Anomaly Detection Algorithms, an Incident is only generated when both algorithms detect anomalous behavior.

Correlation: Detections

Every indicator generated by Ingest & Analytics is further processed by Correlation to identify associations/commonalities that will enable them to be grouped together into a single detection (that will eventually be surfaced as an incident).

As Correlation groups related Indicators together, it also identifies which Indicator is “Primary” (i.e., the leading Indicator of a problem). The Primary Indicator is the basis upon which an Incident is generated, and it also determines which Runbook will be executed. All other Indicators in the Detection take on a supporting role as “Correlated” Indicators.
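The grouping step can be sketched as follows, under two loudly stated assumptions: indicators are grouped by a single key (here application plus location, one of the relationships listed in the introduction), and the "leading" Indicator is taken to be the earliest one. The real Correlation logic considers more relationships than this; all names below are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, List


@dataclass
class Indicator:
    application: str
    location: str
    metric: str
    timestamp: int  # seconds since epoch


@dataclass
class Detection:
    primary: Indicator
    correlated: List[Indicator] = field(default_factory=list)


def correlate(indicators: List[Indicator],
              key: Callable[[Indicator], Hashable] = lambda i: (i.application, i.location),
              ) -> List[Detection]:
    groups: Dict[Hashable, List[Indicator]] = defaultdict(list)
    for ind in indicators:
        groups[key(ind)].append(ind)
    detections = []
    for members in groups.values():
        members.sort(key=lambda i: i.timestamp)  # assumption: earliest = leading indicator
        detections.append(Detection(primary=members[0], correlated=members[1:]))
    return detections


found = correlate([
    Indicator("MS Teams", "Boston", "% Retrans Packets", 100),
    Indicator("SAP", "Paris", "User Response Time", 120),
    Indicator("MS Teams", "Boston", "User Response Time", 160),
])
for d in found:
    print(d.primary.application, d.primary.metric, len(d.correlated))
# MS Teams % Retrans Packets 1
# SAP User Response Time 0
```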

Incidents

Overview

Riverbed Console surfaces anomalous events (represented by a Detection) in an Incident report.

For new events, Riverbed Console generates a new Incident to contain the event information gathered so far (i.e., the Detection), so an Incident contains Primary Indicator information and Correlated Indicators information (if any exist). Whenever a new Incident is created, an associated Runbook is automatically executed, and its output is attached to the Incident (in the Runbook Analysis section).

For recurring events, Riverbed Console matches the recurrent Detection to an existing active Incident. Runbooks are not automatically executed for recurrences.
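The new-versus-recurring behavior can be sketched as a small manager, assuming each Detection carries some match key that identifies the active Incident it recurs against (how Riverbed Console actually matches recurrences is not described here). The class, field, and key names are hypothetical; the defaults of [State: New] and [Priority: Low] come from the Incident Lifecycle section.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, List


@dataclass
class Incident:
    detection: dict
    state: str = "New"     # initial state of every new Incident
    priority: str = "Low"  # default until a Runbook execution updates it
    recurrences: int = 0
    runbook_outputs: List[dict] = field(default_factory=list)


class IncidentManager:
    """Hypothetical sketch of new-vs-recurring handling; not the Riverbed Console API."""

    def __init__(self, run_runbook: Callable[[dict], dict]):
        self.run_runbook = run_runbook
        self.active: Dict[Hashable, Incident] = {}  # match key -> active Incident

    def on_detection(self, match_key: Hashable, detection: dict) -> Incident:
        incident = self.active.get(match_key)
        if incident is None:
            # New event: create an Incident and automatically execute its Runbook.
            incident = Incident(detection=detection)
            self.active[match_key] = incident
            incident.runbook_outputs.append(self.run_runbook(detection))
        else:
            # Recurrence: attach to the existing Incident; no automatic Runbook run.
            incident.recurrences += 1
        return incident


runs = []


def fake_runbook(detection: dict) -> dict:
    runs.append(detection)  # record each automatic execution
    return {"analysis": "ok"}


manager = IncidentManager(run_runbook=fake_runbook)
first = manager.on_detection(("MS Teams", "Boston"), {"metric": "% Retrans Packets"})
again = manager.on_detection(("MS Teams", "Boston"), {"metric": "% Retrans Packets"})
print(first is again, first.recurrences, len(runs))  # True 1 1
```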


Basic Anatomy of an Incident

Each Incident report combines the information gathered throughout the Analytics Pipeline (i.e., Detection, Primary Indicator, Correlated Indicators, …) with the result of the Runbook execution (i.e., Impacts, Prioritization, Prioritization Factors, and Runbook Analysis). The content generated by a Runbook execution will likely differ from one execution to the next.

Please refer to the diagram below for a high-level view of the basic anatomy of an Incident (i.e. what information it contains, and the source of that information).

- Section: <Header>: Top-level summary information for this Incident:
  - [Priority]: The default is [Priority: Low] when an Incident is created; [Priority] is then updated based on the analysis performed during Runbook execution. Ultimately, it is the Runbook logic/analysis that determines [Priority].
  - [Description]: This information is gleaned from the Primary Indicator provided by Correlation::Detection (e.g., "MS Teams at Boston shows an increase in % Retrans Packets").

- Section: <Management/Lifecycle>: Information and tools related to the Incident and its lifecycle:
  - [State]: Set to [State: New] when an Incident is created. Users can change the [State] to represent the Incident's current phase in its lifecycle.
  - [Start Timestamp]: The timestamp when the Incident was created.
  - [End Timestamp / Ongoing]: The timestamp when the Incident ended (i.e., no recent recurrence of an Indicator). Incidents that have not yet ended are flagged as [Ongoing].
  - [Notes]: Opens the Notes tool, which lets Users comment on an Incident. Clicking this tool displays the history of [Notes] for review and lets the User add more [Notes].
  - [Activity Log]: Opens the logging tool, which gives Users visibility into activity associated with an Incident. Clicking this tool displays the [Activity Log] entries with timestamps (e.g., "Runbook status changed to Completed").
  - [Share]: Provides quick access to the unique URL associated with a specific Incident. Clicking this tool lets the User [Copy Link] to the clipboard. (Note: Users can also copy the URL from their web browser to share a specific Incident.)

- Section: [Impact Summary]: Renders information generated by Runbook logic that assesses the potential business impact of an Incident. (Note: If the list of impacts is long, a View All link appears in the lower-right of the card so the full list can be displayed.)
  - [Impacts: Users]: A card listing the Users potentially impacted by this Incident (based on the assessment made by the associated Runbook execution).
  - [Impacts: Locations]: A card listing the Locations potentially impacted by this Incident (based on the assessment made by the associated Runbook execution).
  - [Impacts: Applications]: A card listing the Applications potentially impacted by this Incident (based on the assessment made by the associated Runbook execution).

- Section: [Prioritization Factors]: A list of the information used by Runbook logic to determine the [Priority] of an Incident.

- Section: [Incident Source]: Renders the components of the Detection built by Correlation:
  - [Primary Indicator]: The anomaly that Correlation identified as the likely primary cause of this Incident, with supporting information:
    - A textual summary of the Entity/Metric/Location and Timestamp for the anomaly.
    - A graphical representation of the model (recent history), with annotations indicating:
      - [Primary Indicator]: when/where the Anomaly Detection Algorithm detected an unexpected Key Measurement value.
      - [Incident Start]: when/where an Incident was generated to surface this event. (Propagation and processing delays cause [Incident Start] to occur after [Primary Indicator].)
      - [Runbook Executes]: when/where the associated Runbook was executed to gather additional data/context from the Customer Environment (Data Sources and 3rd-party sources) and to apply logic/analysis that assesses impact, prioritization, and likely cause. The result of a Runbook execution is rendered in Section: [Runbook Analysis] of an Incident.
      - [Incident End]: when/where the Incident ended, after a period in which either the anomaly recovered (e.g., the Device returned to [Status: UP]) or the anomaly did not recur.
    - Tools to navigate through the graphical model history:
      - [Rewind-button]: expands the view to include an additional, earlier time period (6 hours per button press).
      - [FastForward-button]: narrows the view to a more recent time period (6 hours per button press).
      - [Home-button]: returns to the default view of the model history.
  - [Correlated Indicators]: A list of one or more anomalies/Indicators that Correlation identified as having some relationship with the Primary Indicator for this Incident, with supporting information:
    - High-level summary/history information:
      - A summary count of the number of Correlated Indicators.
      - A graphical summary depicting "Count of Indicators Over Time".
    - Detail information for each Correlated Indicator, including:
      - Entity Name & Details, Entity Type, Metric (the Key Measurement that exhibited anomalous behavior), Indicator Type (the type of anomaly detected), Expected Range (the Key Measurement value that the model expected for this Entity/Metric), Observed Value (the Key Measurement value that was observed for this Entity/Metric), Deviation (the variance from the expected range), and a timestamp for when Correlation of this Indicator began.
      - A graphical representation of the model (recent history), with annotations indicating:
        - [Incident Start] and [Runbook Executes]: as described for the Primary Indicator graph.
        - [Correlation Starts]: when/where Correlation grouped this Indicator into the Detection that formed the basis for this Incident.
      - The same [Rewind-button], [FastForward-button], and [Home-button] navigation tools as the Primary Indicator graph.

- Section: [Runbook Analysis]: Runbook information, including overall summary information and per-execution detail information:
  - <Runbook - Overall Summary Information>:
    - [Trigger Summary]: Information generated by Correlation based on the source Entity type in the Primary Indicator; it is used to identify the appropriate Runbook to execute.
    - [Runbook/History]: A pick-list of the Runbook executions associated with this Incident.
      - Typically, a single Runbook execution is associated with an Incident (i.e., the execution triggered when the Incident was first generated).
      - The User can [Rerun] a Runbook while triaging/analyzing an Incident (e.g., to obtain a more current view of the analysis); these additional Runbook executions also appear in the pick-list.
      - For each Runbook execution, the pick-list entry contains:
        - The [Priority] determined by that particular execution.
        - The Runbook Name (the name of the Runbook that was executed for this Incident).
        - The timestamp of the Runbook execution.
    - [Runbook/Execution-status]: A small icon that, when clicked, opens a [Runbook Errors, Warnings, and Variables] window with additional detail about the Runbook execution:
      - [exclamation-in-red-circle]: for Runbook executions that "Completed with Errors".
      - [info-in-green-circle]: for Runbook executions that "Completed".
    - [Runbook/Execution-disposition]: Describes the level of success of a Runbook execution:
      - [Last Run: Completed]: All Runbook nodes/logic executed as expected.
      - [Last Run: Completed With Errors]: Issues were encountered during the Runbook execution. Additional detail may be available from the [Runbook/Execution-status] icon, which opens the [Runbook Errors, Warnings, and Variables] window.
        - Note: In these scenarios, the Runbook has typically executed its nodes/logic but encountered issues. For example, if Entities are not configured for monitoring/observability across all Data Sources, the Runbook may query a Data Source for information about an Entity that is configured on another Data Source but not on the queried one.
    - [<ellipses>-menu]: Provides access to Runbook operations:
      - [Rerun Runbook]: Starts a new Runbook execution, which updates the history and has its own associated Runbook Analysis.
      - [Open Runbook]: Opens an embedded panel with a read-only rendering of the Runbook (i.e., all nodes, their interconnections, flow/logic, and visualizations). This view can help the User understand the path[s] of execution that generated the Runbook Analysis.
        - Note: The embedded panel also provides an "Edit" link (upper-right) that opens the Runbook in the Runbook Editor (i.e., read/write access).
    - Note: In rare scenarios, Runbook executions are throttled. In those cases, <Runbook - Overall Summary Information> contains:
      - a [Runbook/History] pick-list with a null entry: "No Runbook Analysis Available";
      - no [Runbook/Execution-status] icon;
      - a [Runbook/Execution-disposition] of [Last Run: Unknown].
  - <Runbook - Per-execution Detail Information>: A Runbook execution initializes with the Detection/Trigger information supplied by Correlation and then proceeds through the connected nodes, their interconnections, and flow/logic, and finally the visualizations. The path[s] taken through a Runbook during a particular execution determine what is rendered in the Runbook Analysis section. Visualization nodes are the elements that surface information into the Runbook Analysis section, and they currently support the following visualization types:
    - [Table]
    - [Pie Chart]
    - [Bar Chart]
    - [Timeseries Chart]
    - [Bubble Chart]
    - [Correlation Chart]
    - [Cards]
    - [Gauges]
    - [Connection Graph]
    - [Text]
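The per-execution flow described above (initialize with the trigger information, follow connected nodes and their logic, and render only the visualizations that are reached) can be sketched as a graph walk. Everything here is hypothetical: the `Node` structure, the condition gates, and the breadth-first order are illustrative, and real Runbooks are built in the Runbook Editor rather than in code.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Node:
    run: Callable[[dict], dict] = lambda ctx: ctx       # transforms the execution context
    condition: Optional[Callable[[dict], bool]] = None  # branch gate; None = always taken
    visualization: Optional[str] = None                 # e.g. "Table", "Text"
    next: List[str] = field(default_factory=list)


def execute(nodes: Dict[str, Node], start: str, trigger: dict) -> List[Tuple[str, str]]:
    """Walk the graph from the trigger node; return the visualizations that were reached."""
    context = dict(trigger)  # initialized with the Detection/Trigger information
    analysis: List[Tuple[str, str]] = []
    queue, seen = deque([start]), set()
    while queue:
        node_id = queue.popleft()
        if node_id in seen:
            continue
        seen.add(node_id)
        node = nodes[node_id]
        if node.condition is not None and not node.condition(context):
            continue  # branch not taken in this execution; its successors are not queued here
        context = node.run(context)
        if node.visualization:
            analysis.append((node.visualization, node_id))
        queue.extend(node.next)
    return analysis


nodes = {
    "trigger": Node(next=["query"]),
    "query": Node(run=lambda c: {**c, "retrans_pct": 4.2}, next=["high", "low"]),
    "high": Node(condition=lambda c: c["retrans_pct"] > 3.0, visualization="Table"),
    "low": Node(condition=lambda c: c["retrans_pct"] <= 3.0, visualization="Text"),
}
print(execute(nodes, "trigger", {"application": "MS Teams", "location": "Boston"}))
# [('Table', 'high')]
```

Because the query result decides which branch runs, two executions of the same Runbook can render different visualizations, which is consistent with the Runbook Analysis content changing from one execution to the next.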

Incident Lifecycle

Incidents surface Detections as a report that contains all information gathered over the course of processing through the pipeline and the associated Runbook automation.

When first created, an Incident has [State: New], and the following areas of the Incident are populated with the information gathered by Ingest & Analytics, and Correlation:

- [Priority: Low] <Default>
- [Description]
- [State]
- [Start Timestamp]
- [Ongoing]
- [Primary Indicator]
- [Correlated Indicators]
- [Trigger Summary]

All [State] changes are initiated/managed by the User, and when a User is working an Incident they may decide to update the [State] as follows:

- [State: Investigating]: Indicates that this Incident is undergoing active investigation.
- [State: Closed]: Indicates that this Incident has been closed.

Additionally, over the course of its life, an Incident may undergo a variety of events:

- [Impact Analysis Ready]: The Runbook has executed and the Impact assessment has been completed, which populates/updates the following areas of the Incident:
  - [Priority]
  - [Impacts: Users]
  - [Impacts: Locations]
  - [Impacts: Applications]
  - [Prioritization Factors]
  - [Runbook/History]
  - [Runbook/Execution-status]
  - [Runbook/Execution-disposition]
  - Runbook Visualizations
- [Incident Indicators Updated]: The Analytics Pipeline (Ingest & Analytics, and Correlation) has identified a recurrence of Indicators related to an existing Incident, which updates:
  - [Primary Indicator] (recurrence count)
  - [Correlated Indicators] (recurrence count and/or correlation start)
- [Incident Note Added]: A User has added a Note to the Incident.
- [Incident Note Updated]: A User has updated a Note on the Incident.
- [Ongoing Incident Changed]: The Incident has ended ([End Timestamp] is recorded) and is no longer [Ongoing], which occurs:
  - for performance-based Incidents, when there has been no recurrence of the associated Indicators for a period of time (e.g., 1 hour);
  - for state-based Incidents, some period of time after the last Indicator has entered the expected state.
- [Incident Status Changed]: A User has changed the [Status] of the Incident.
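The [Ongoing Incident Changed] rules can be sketched as a periodic check. The 1-hour quiet period is the example value from the list above; the state-based hold period is a hypothetical placeholder, because the text only says "some period of time". The dataclass and function names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional

PERFORMANCE_QUIET_PERIOD_S = 3600  # the text's example: 1 hour without recurrence
STATE_RECOVERY_HOLD_S = 900        # hypothetical: the text only says "some period of time"


@dataclass
class IncidentTiming:
    kind: str                             # "performance" or "state"
    last_indicator_ts: float              # time of the most recent Indicator recurrence
    recovered_ts: Optional[float] = None  # state-based: when the expected state was re-entered
    end_ts: Optional[float] = None        # recorded once the Incident ends


def update_ongoing(inc: IncidentTiming, now: float) -> bool:
    """Return True while the Incident is [Ongoing]; record end_ts when it ends."""
    if inc.end_ts is not None:
        return False
    if inc.kind == "performance":
        ended = now - inc.last_indicator_ts >= PERFORMANCE_QUIET_PERIOD_S
    else:
        ended = inc.recovered_ts is not None and now - inc.recovered_ts >= STATE_RECOVERY_HOLD_S
    if ended:
        inc.end_ts = now
    return not ended


perf = IncidentTiming(kind="performance", last_indicator_ts=0)
print(update_ongoing(perf, 1800), update_ongoing(perf, 3600), perf.end_ts)  # True False 3600
```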

Automation (LogIQ Engine)

The LogIQ engine executes automated investigations (Runbooks) associated with an event (detection/trigger), and attaches the resulting analysis to the incident report (e.g., assessed business impact, supporting data/context, etc.).

The determination of which Runbook to execute is based on the detection/trigger information gathered by correlation (which aligns with system level incident triggers) and any user-defined custom triggers:

- System-level incident triggers: Map a specific Runbook to each of the supported system-level incident triggers:
  - [Device Down Issue]: This trigger is intended to initiate the associated Runbook when an individual device has been identified as unreachable or has rebooted.
    - Triggering entity: Device (with its properties).
  - [Interface Performance Issue]: This trigger is intended to initiate the associated Runbook when an interface has been identified as down/unreachable, or is exhibiting performance degradation in terms of congestion, packet errors, or packet drops.
    - Triggering entity: Interface (with its properties).
  - [Multi Device Down Issue]: This trigger is intended to initiate the associated Runbook when multiple devices at the same location have been identified as unreachable.
    - Triggering entity: List of devices (with their properties).
  - [Application Location Performance Issue]: This trigger is intended to initiate the associated Runbook when an application has exhibited possible performance degradation for a number of users at a specific location, in terms of response time, retransmissions, or lower network usage.
    - Triggering entity: Application and Location.

- User-defined Custom Triggers: Provide a mechanism for Users to refine the system-level Incident Triggers in order to perform more targeted automated investigations (e.g., associate a specific Runbook to investigate a Detection/Trigger where [Application: DNS]). Users can refine system-level Incident Triggers by configuring a Custom Trigger based on:
  - Properties (e.g., Application, Application Type, Application Version, Importance, Location, and Location_role, …)
  - Metrics (e.g., Round Trip Time, Throughput, % Retrans Packets, % Failed Connections, and User Response Time, …)
  - Trigger information (e.g., Entity Type, Triggering Metric, …)
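How a Custom Trigger might refine a system-level trigger can be sketched as rule matching, assuming that when several rules match, the one with the most property conditions (the most specific) wins, and that a rule with no conditions acts as the system-level default. This precedence rule is an assumption, not documented LogIQ behavior, and the Runbook names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TriggerRule:
    runbook: str
    trigger_type: str                                         # e.g. "Application Location Performance Issue"
    conditions: Dict[str, str] = field(default_factory=dict)  # empty = system-level trigger


def select_runbook(trigger_type: str, properties: Dict[str, str],
                   rules: List[TriggerRule]) -> Optional[str]:
    """Pick the most specific matching rule (assumption: more conditions = more specific)."""
    matches = [
        r for r in rules
        if r.trigger_type == trigger_type
        and all(properties.get(k) == v for k, v in r.conditions.items())
    ]
    if not matches:
        return None
    return max(matches, key=lambda r: len(r.conditions)).runbook


rules = [
    TriggerRule("Default App Runbook", "Application Location Performance Issue"),
    TriggerRule("DNS Investigation", "Application Location Performance Issue",
                {"Application": "DNS"}),
]
print(select_runbook("Application Location Performance Issue",
                     {"Application": "DNS", "Location": "Boston"}, rules))  # DNS Investigation
print(select_runbook("Application Location Performance Issue",
                     {"Application": "SAP", "Location": "Paris"}, rules))   # Default App Runbook
```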